Randomization maximalism

Too much of a good thing is not good

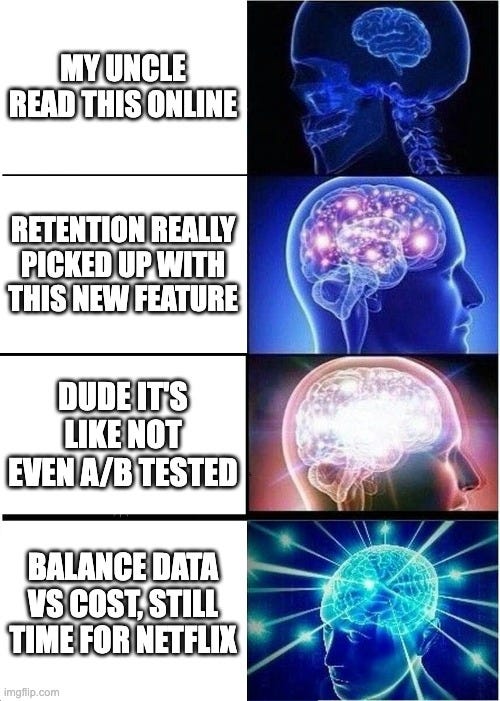

Some folks are “randomization maximalists.”

In tech, randomization maximalists insist on A/B testing every new feature. “If it’s not A/B tested, it can’t be trusted,” they may say.

In science, randomization maximalists only take randomized experiments seriously. You may recognize them by their sneers whenever you mention “observational data.”

I think such maximalism is misguided.

To be good Bayesians, we should incorporate all available evidence, weighing the evidence by its quality. There’s nothing in Bayes’ formula that says “only evidence from randomized experiments counts.”

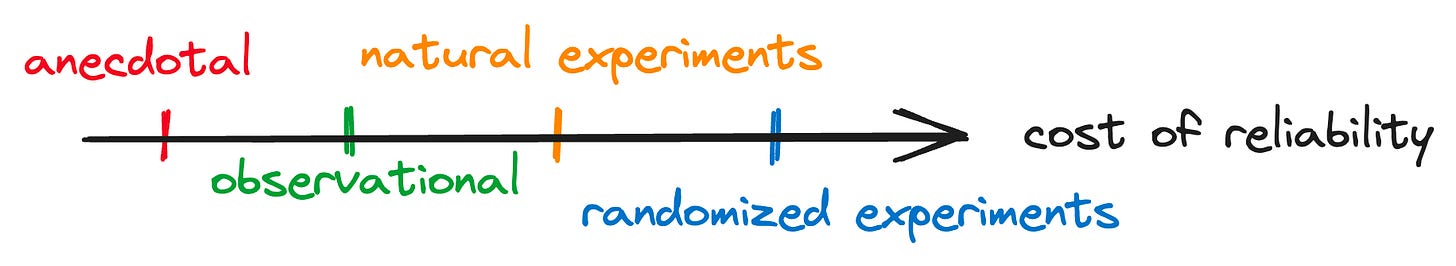
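To make "weighing the evidence by its quality" concrete, here is a minimal sketch of Bayesian updating in odds form. The likelihood ratios below are made-up numbers for illustration only, and the sketch assumes the pieces of evidence are conditionally independent, which real evidence often is not. The idea is simply that weaker evidence has a likelihood ratio closer to 1, so it moves our belief less, but it still moves it.

```python
def update(prior: float, likelihood_ratio: float) -> float:
    """Return the posterior probability of a hypothesis H.

    likelihood_ratio is P(evidence | H) / P(evidence | not H).
    A ratio of 1 means the evidence is uninformative.
    """
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


# Hypothetical likelihood ratios: lower-quality evidence sits closer to 1.
evidence = [
    ("anecdote", 1.5),
    ("observational study", 3.0),
    ("randomized experiment", 10.0),
]

belief = 0.5  # assumed starting belief that H is true
for name, ratio in evidence:
    belief = update(belief, ratio)
    print(f"after {name}: {belief:.3f}")
```

Nothing in the update rule discards the anecdote; it just counts for less than the randomized experiment.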

Now, for quality, we can roughly rank evidence as follows:

1. Randomized experiments;
2. Natural experiments;
3. Observational data;
4. Anecdotal data.

Here, items at the top of the list are more reliable. So, other things equal, we prefer data on vaccine efficacy from a randomized double-blind trial to the opinions of an unhappy uncle.

In an ideal world, we would have costless access to the most reliable data (i.e., randomized experiments). That, alas, is not the world we live in.

Consider this example. It’s 11 pm, and you want to set the alarm for a rainy morning. You have noticed that the bus often seems to be late when it rains. That’s clearly not high-quality data. Maybe you’re grumpy when it rains, and everything just seems to take longer. Sure, you could cross-reference municipal timeliness reports with weather data… only it’s late, you’re already tired, and you’d rather watch Netflix. Randomization is also plainly impossible: You can’t manipulate weather on a planetary scale to understand its impact on local bus services. So, you set the alarm 15 minutes early and call it a night.

The example may seem silly, but the point is general: We must trade off the costs of higher-quality data against the benefits of extra reliability.
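One rough way to put numbers on this trade-off is the expected value of perfect information: how much better off would we be, on average, if we knew the answer for certain before deciding? The sketch below applies that idea to the bus example. All the numbers (the probability that the bus is late, the losses, and the research cost) are invented for illustration, and the function names are my own.

```python
def expected_losses(p_late: float, losses: dict) -> dict:
    """Expected loss of each action, given the probability the bus is late.

    losses maps an action to (loss if the bus is late, loss if it is on time).
    """
    return {
        action: p_late * if_late + (1.0 - p_late) * if_on_time
        for action, (if_late, if_on_time) in losses.items()
    }


def value_of_perfect_information(p_late: float, losses: dict) -> float:
    """Expected reduction in loss from knowing the outcome before acting."""
    best_uninformed = min(expected_losses(p_late, losses).values())
    best_if_late = min(if_late for if_late, _ in losses.values())
    best_if_on_time = min(if_on_time for _, if_on_time in losses.values())
    best_informed = p_late * best_if_late + (1.0 - p_late) * best_if_on_time
    return best_uninformed - best_informed


# Hypothetical losses in arbitrary "annoyance units".
losses = {
    "alarm_early": (1.0, 1.0),   # lose some sleep either way
    "alarm_normal": (5.0, 0.0),  # painful only if the bus is late
}
p_late = 0.6         # assumed belief that the bus is late when it rains
research_cost = 2.0  # assumed cost of digging through timeliness reports

evpi = value_of_perfect_information(p_late, losses)
print(f"value of perfect information: {evpi:.2f}")
print("worth researching" if evpi > research_cost else "just set the alarm early")
```

With these made-up numbers, even perfect information is worth less than the effort of getting it, so setting the alarm early and going to bed is the sensible choice.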

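A simple two-by-two matrix may help. Put “cost of reliability” on the x-axis and “value of information” on the y-axis. That gives four quadrants, from “low value, low cost” in the bottom-left to “high value, high cost” in the top-right.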

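More reliable evidence tends to be more costly. Hence, in terms of the “cost of reliability,” our hierarchy above typically runs from anecdotal data (cheapest) through observational data and natural experiments to randomized experiments (most expensive).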

The previous “late bus on a rainy day” example is a case of “low value of information, high cost of reliability,” which puts it in the bottom-right quadrant.

Vaccine trials are a classic example of “high value of information, high cost of reliability,” the top-right quadrant.

Most A/B tests in tech have a fairly low cost of reliability and a reasonably high value of information, putting them in the top-left quadrant.

Something in the bottom-left corner could be “your team’s favorite ice cream flavor.” It’s easy to collect reliable data—just ask your team—but how wrong can you really go with salted caramel?
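The four quadrants can be sketched in a few lines of code. The scores below are rough, made-up placements on a 0 to 1 scale (not measurements), and the 0.5 threshold is an arbitrary assumption; the point is only to show how the examples from the text map onto the matrix.

```python
def quadrant(value_of_information: float,
             cost_of_reliability: float,
             threshold: float = 0.5) -> str:
    """Place a decision in the two-by-two matrix.

    x-axis: cost of reliability (left = cheap, right = costly).
    y-axis: value of information (bottom = low, top = high).
    """
    vertical = "top" if value_of_information >= threshold else "bottom"
    horizontal = "right" if cost_of_reliability >= threshold else "left"
    return f"{vertical}-{horizontal}"


# Hypothetical (value of information, cost of reliability) scores.
examples = {
    "late bus on a rainy day": (0.1, 0.9),
    "vaccine trial": (0.95, 0.9),
    "typical A/B test": (0.7, 0.2),
    "team's favorite ice cream flavor": (0.1, 0.1),
}

for name, (value, cost) in examples.items():
    print(f"{name}: {quadrant(value, cost)}")
```

In practice, the hard part is estimating the two scores, not looking up the quadrant.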

To me, this “value of information vs cost of reliability” framework is a much more practical way to approach real-world problems than randomization maximalism.

To be clear: I don’t mean to suggest that reliability should be ignored. On the contrary, we need the most reliable data for critical decisions. We also need infrastructure that allows the highest possible reliability for a given level of cost. However, it’s OK to also use observational and even anecdotal data, as long as we understand their limitations.

In the vast spectrum of life’s choices, not everything warrants the randomized treatment. Some decisions must rely on gold-standard evidence. For others, a gut feeling or an anecdote is good enough.

Let’s move beyond randomization maximalism and embrace a more nuanced, pragmatic approach to decision-making.