Don't Run Coibion-Gorodnichenko Regressions with Micro Data

Noise is this regression's worst enemy.

You should never run Coibion-Gorodnichenko regressions with micro data. Many people do. But you shouldn’t.

Here’s why.

What’s a Coibion-Gorodnichenko regression anyway?

In a trail-blazing paper, Olivier Coibion and Yuriy Gorodnichenko proposed an innovative approach for measuring expectation stickiness.

Here’s how it works. Let’s define:

Coibion and Gorodnichenko suggested regressing forecast errors on past forecast revisions:
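In one common notation (the symbols are my assumption here, not necessarily the ones used in the paper), write $x_{t+h}$ for the realized value of the variable and $F_t x_{t+h}$ for the average forecast of it made at time $t$. The regression can then be sketched as:

$$
\underbrace{x_{t+h} - F_t x_{t+h}}_{\text{forecast error}} = \alpha + \beta \, \underbrace{\left(F_t x_{t+h} - F_{t-1} x_{t+h}\right)}_{\text{forecast revision}} + \varepsilon_{t+h}
$$

The left-hand side is the forecast error and the term in parentheses is the forecast revision between $t-1$ and $t$.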

If people are rational and have perfect information, β should be zero. That’s a direct implication of the orthogonality property: If you use information optimally, I should be unable to predict your forecast errors with any variable that you had available when making your forecasts.

There’s nothing groundbreaking here. People have run similar regressions for ages. In fact, Nobel laureate William Nordhaus suggested the same “forecast errors on forecast revisions” regression back in 1987!

The critical contribution of Coibion and Gorodnichenko was not “hey, you can, like, regress forecast errors on forecast revisions.” Instead, the actual contribution was twofold:

They showed that if people are rational but have imperfect information, such as in sticky-information or noisy-information models, β will be positive for average expectations. For individual expectations, the estimated β should still be zero.

They showed how to recover structural parameters of models with such “information rigidities” from the estimated β.

That’s the beauty of the Coibion-Gorodnichenko paper: We can use a simple regression to estimate the deep parameters of models with information rigidities.

Empirically, Coibion and Gorodnichenko found statistically significant positive estimates of β. Count that as a win for models in which expectations respond to news sluggishly.

The story takes an unexpected turn

In 2018, Pedro Bordalo, Nicola Gennaioli, Yueran Ma & Andrei Shleifer released a working paper with the provocative title of “Over-reaction in Macroeconomic Expectations.”

Wait, what? Overreaction in macro expectations? Huge if true, as they say.

What Bordalo, Gennaioli, Ma & Shleifer did was brilliantly simple. They took the Coibion-Gorodnichenko regression and estimated it using individual forecaster data. That is, let:

Then, they ran the micro Coibion-Gorodnichenko regression:
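Written out in assumed notation (mine, for illustration), with $F_{i,t} x_{t+h}$ denoting the forecast of $x_{t+h}$ that forecaster $i$ makes at time $t$, the micro version looks roughly like this:

$$
x_{t+h} - F_{i,t} x_{t+h} = \alpha + \beta \left(F_{i,t} x_{t+h} - F_{i,t-1} x_{t+h}\right) + \varepsilon_{i,t+h}
$$

Note that $F_{i,t} x_{t+h}$ appears with a minus sign on the left and a plus sign on the right.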

That’s almost identical to what Coibion and Gorodnichenko did. The only difference is that now we’re using individual instead of average forecasts.

What a big difference this tiny change makes, however. While most estimates of the Coibion-Gorodnichenko regression are positive for average expectations, they turn negative for individual forecasts.

This paper has been very influential: It was published in the American Economic Review, the leading journal in economics, and has garnered almost 400 citations.

The problem with the micro Coibion-Gorodnichenko regression

OK, here’s the thing: The micro Coibion-Gorodnichenko regression is extremely sensitive to noise in expectations.

Recall the regression:

The time-t forecast enters both the left- and right-hand side of the regression but with a different sign.

If individual forecasts are measured with error, that measurement error simultaneously enters both sides of the equation. Technically, the regression suffers from a non-standard measurement error problem.
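Here is a back-of-the-envelope sketch of how that plays out. The notation and assumptions are mine, for illustration: $F_{i,t}$ is forecaster $i$'s true forecast of $x_{t+h}$, the survey records $\tilde F_{i,t} = F_{i,t} + \eta_{i,t}$, and the noise $\eta_{i,t}$ has variance $\sigma_\eta^2$ and is uncorrelated over time and with everything else. Let $\sigma_r^2$ be the variance of the true revision.

$$
\begin{aligned}
x_{t+h} - \tilde F_{i,t} &= \left(x_{t+h} - F_{i,t}\right) - \eta_{i,t} \\
\tilde F_{i,t} - \tilde F_{i,t-1} &= \left(F_{i,t} - F_{i,t-1}\right) + \eta_{i,t} - \eta_{i,t-1} \\
\operatorname{plim} \hat\beta &= \frac{\beta \, \sigma_r^2 - \sigma_\eta^2}{\sigma_r^2 + 2 \sigma_\eta^2}
\end{aligned}
$$

The $-\sigma_\eta^2$ in the numerator comes from $\eta_{i,t}$ showing up on both sides with opposite signs. The $2\sigma_\eta^2$ in the denominator is the usual attenuation from a noisy regressor.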

Usually, measurement error is pretty benign, and it just makes finding an effect more difficult. However, that’s not the case here. For example, if the true β is zero, but expectations are measured with error, the estimated β will be negative.[1] Simply put, the same measurement error appears with opposite signs on the two sides of the regression, which mechanically pushes the estimated β downward.
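A quick simulation can make this concrete. The sketch below is a toy setup of my own, not anything taken from the papers discussed: true forecast errors and true revisions are independent standard normals (so the true β is zero), and each reported forecast carries independent noise with standard deviation `noise_sd`. All function and variable names are hypothetical.

```python
import random


def ols_slope(x, y):
    """Slope from a univariate OLS regression of y on x (with intercept)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var = sum((a - mean_x) ** 2 for a in x)
    return cov / var


def simulate_micro_cg(n=50_000, noise_sd=0.5, seed=0):
    """Estimate beta from simulated forecaster-level data where true beta = 0."""
    rng = random.Random(seed)
    revisions, errors = [], []
    for _ in range(n):
        true_revision = rng.gauss(0, 1)     # F_t - F_{t-1}, without noise
        true_error = rng.gauss(0, 1)        # x - F_t, independent of the revision
        eta_t = rng.gauss(0, noise_sd)      # noise in the reported time-t forecast
        eta_prev = rng.gauss(0, noise_sd)   # noise in the reported time-(t-1) forecast
        # The time-t noise enters the revision with a plus sign...
        revisions.append(true_revision + eta_t - eta_prev)
        # ...and the forecast error with a minus sign.
        errors.append(true_error - eta_t)
    return ols_slope(revisions, errors)


beta_clean = simulate_micro_cg(noise_sd=0.0)
beta_noisy = simulate_micro_cg(noise_sd=0.5)
print(f"no noise:   beta = {beta_clean:+.3f}")
print(f"with noise: beta = {beta_noisy:+.3f}")
```

Under these assumptions, the noisy estimate should settle near -noise_sd² / (1 + 2·noise_sd²), which is clearly negative even though nobody in the simulation overreacts to anything.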

Actually, that’s also known. In our own research, we made this point in two separate papers, already published in respectable journals. There’s even a paper by Alexandre N. Kohlhas and Ansgar Walther in the American Economic Review that makes the same point empirically.

Kohlhas and Walther show that the negative coefficient disappears when you remove just 1% of outlier observations.

But what about robustness tests?

It’s not that Bordalo, Gennaioli, Ma & Shleifer were unaware of this problem. They knew about it: there’s a dedicated robustness section for that.

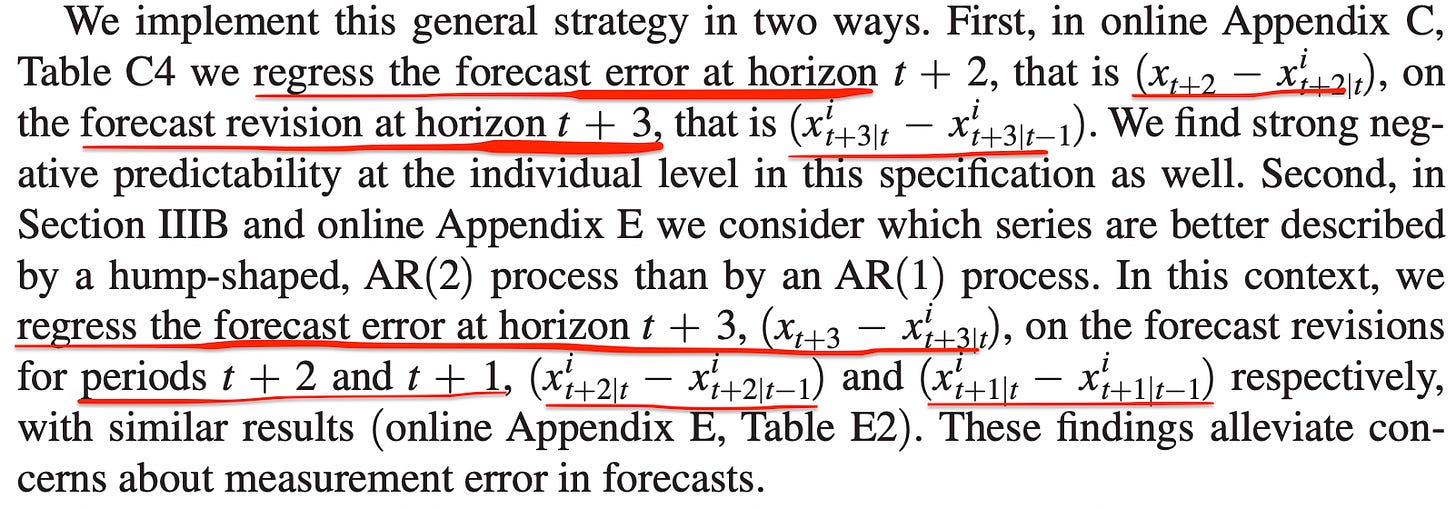

Basically, these authors regressed forecast errors on forecast revisions for a different time horizon.

Unfortunately, this robustness test is not very robust.

This test assumes that measurement error is uncorrelated across forecasting horizons. That is, if, today, I predict inflation for 2024Q1 and 2024Q2, any measurement error in my forecast for 2024Q1 is uncorrelated with the measurement error for the 2024Q2 forecast. But that’s not plausible.

In fact, in our paper with Artūras Juodis, we found that noise in expectations is strongly correlated across forecasting horizons.

So, the main assumption that this robustness test rests on is violated.
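To illustrate why the correlation matters, here is a toy simulation. It is a stylized stand-in of my own and not the exact specification from the paper: the forecast error for one horizon (A) is regressed on the forecast revision for another horizon (B), the true β is zero, and the time-t noise in the two reported forecasts has correlation `rho`. All names are hypothetical.

```python
import random


def ols_slope(x, y):
    """Slope from a univariate OLS regression of y on x (with intercept)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var = sum((a - mean_x) ** 2 for a in x)
    return cov / var


def cross_horizon_slope(rho, n=50_000, noise_sd=0.5, seed=1):
    """Regress the horizon-A forecast error on the horizon-B forecast revision.

    rho is the assumed correlation between the time-t noise in the
    horizon-A forecast and the time-t noise in the horizon-B forecast.
    """
    rng = random.Random(seed)
    revisions_b, errors_a = [], []
    for _ in range(n):
        true_error_a = rng.gauss(0, 1)      # independent of revisions: true beta = 0
        true_revision_b = rng.gauss(0, 1)
        eta_a = rng.gauss(0, noise_sd)      # time-t noise, horizon A
        # Time-t noise for horizon B, built to have correlation rho with eta_a.
        eta_b = rho * eta_a + (1 - rho ** 2) ** 0.5 * rng.gauss(0, noise_sd)
        eta_b_prev = rng.gauss(0, noise_sd)  # time-(t-1) noise, horizon B
        errors_a.append(true_error_a - eta_a)
        revisions_b.append(true_revision_b + eta_b - eta_b_prev)
    return ols_slope(revisions_b, errors_a)


slope_uncorrelated = cross_horizon_slope(rho=0.0)
slope_correlated = cross_horizon_slope(rho=0.8)
print(f"rho = 0.0: beta = {slope_uncorrelated:+.3f}")
print(f"rho = 0.8: beta = {slope_correlated:+.3f}")
```

With `rho=0` the cross-horizon regression is clean, which is the case the robustness test needs. Once the noise is correlated across horizons, the negative bias comes back, scaled by `rho`.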

Conclusions and recommendations

The upshot is that the micro Coibion-Gorodnichenko regression is very sensitive to noise in expectations.

To be clear: Noise in expectations does not need to be all due to measurement error. Likely, some of that noise is just that people, you know, are noisy.

However, the interpretation of the estimates becomes significantly different. It’s not that “individual expectations overreact, consensus expectations underreact.” Instead, the Coibion-Gorodnichenko regression means different things when applied to different data:

When applied to average forecasts, the Coibion-Gorodnichenko regression tells us how expectations react to aggregate shocks;

When applied to individual data, the Coibion-Gorodnichenko regression mostly tells us how forecast errors respond to idiosyncratic noise in expectations. (In our work with Florian Peters, we found that the micro Coibion-Gorodnichenko regression places around 80% of the weight on idiosyncratic noise.)

My take is that, due to this noise sensitivity, you should never apply the Coibion-Gorodnichenko regression to micro data. Better methods exist, such as the impulse-response approach we proposed in our recent work.

However, if you really insist on running micro Coibion-Gorodnichenko regressions, you should make sure that:

Your results hold up when you winsorize your data (at, say, 5% level);

Your results hold up when you remove outliers (say, 1% of the data);

You understand the extent to which your micro-level results depend on idiosyncratic noise. For that, you can estimate the decomposition proposed in our paper with Florian Peters.
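The first two checks are easy to script. Below is a minimal sketch on made-up data, with assumptions flagged: I split the winsorizing and trimming shares equally across both tails (so "5%" means 2.5% per tail), I trim on both variables, and the toy data set has a true β of zero plus a small share of grossly misreported forecasts. The helper names are hypothetical, and the decomposition from the third point is not covered here.

```python
import random


def ols_slope(x, y):
    """Slope from a univariate OLS regression of y on x (with intercept)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var = sum((a - mean_x) ** 2 for a in x)
    return cov / var


def quantile(values, q):
    """Empirical quantile via linear interpolation between order statistics."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (pos - lo) * (s[hi] - s[lo])


def winsorize(values, share=0.05):
    """Clip values to the [share/2, 1 - share/2] quantile range."""
    lo, hi = quantile(values, share / 2), quantile(values, 1 - share / 2)
    return [min(max(v, lo), hi) for v in values]


def trim(x, y, share=0.01):
    """Drop observations where x or y falls outside its central quantile range.

    Because both variables are screened, slightly more than `share`
    of the sample can be removed.
    """
    x_lo, x_hi = quantile(x, share / 2), quantile(x, 1 - share / 2)
    y_lo, y_hi = quantile(y, share / 2), quantile(y, 1 - share / 2)
    kept = [(a, b) for a, b in zip(x, y) if x_lo <= a <= x_hi and y_lo <= b <= y_hi]
    return [a for a, _ in kept], [b for _, b in kept]


# Toy data: true beta is zero, but about 1% of reported forecasts are way off.
rng = random.Random(2)
revisions, errors = [], []
for _ in range(20_000):
    eta = rng.gauss(0, 10) if rng.random() < 0.01 else 0.0
    revisions.append(rng.gauss(0, 1) + eta)   # noise enters with a plus sign
    errors.append(rng.gauss(0, 1) - eta)      # and here with a minus sign

slope_raw = ols_slope(revisions, errors)
slope_wins = ols_slope(winsorize(revisions), winsorize(errors))
slope_trim = ols_slope(*trim(revisions, errors))
print(f"raw:        beta = {slope_raw:+.3f}")
print(f"winsorized: beta = {slope_wins:+.3f}")
print(f"trimmed:    beta = {slope_trim:+.3f}")
```

If the sign or size of your estimate changes a lot across the three rows, as it does in this toy example, that is a hint that a handful of noisy observations are driving the result.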

I want to conclude with a personal reflection. If I were still in academia, I probably would not have published this post. The fear of criticizing prominent and influential academics can be pretty daunting, especially when you’re just starting out as a researcher. However, I think it's crucial to engage in open and honest debate, regardless of the stature of those involved.

Here's to hoping this post contributes to that ongoing discussion.

[1] See, e.g., Section 4.2 here for the math.